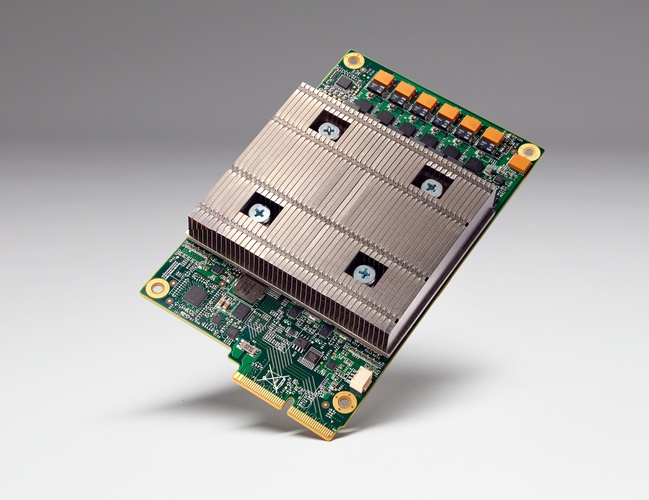

Google developed a processor to power its AI bots

PC World reported: “Forget the CPU, GPU, and FPGA, Google says its Tensor Processing Unit, or TPU, advances machine learning capability by a factor of three generations.”

Since Google’s machine learning platform was publicly released in November, there has been considerable interest in using it for a variety of tasks, not least inside Google itself.

According to company CEO Sundar Pichai, TPU accelerators will never replace CPUs and GPUs, but they can speed up machine learning processes with a fraction of the power draw required by other ASICs. In addition, Google’s voice recognition and Cloud Machine Learning services run on Tensor Processing Unit chips.

The TPU powers various applications at Google, such as RankBrain (to improve the relevancy of results in Google Search), Google Street View (to improve the quality and accuracy of maps and navigation) and AlphaGo, the famed artificial intelligence (AI) Go player that beat top-ranked Go player Lee Sedol this year.

In terms of applications, the TPU makes deep learning considerably more practical, for everything from photo recognition to automatic email responses. The extra capacity allows more sophisticated machine learning models and more precise results. One drawback, however, is that ASICs such as Google’s TPU are traditionally designed for highly specific workloads. The accelerator chip speeds up one kind of task, delivering better performance per watt than existing chips for machine learning.

“We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better-optimised performance per watt for machine learning. This is roughly equivalent to fast-forwarding technology about seven years into the future (three generations of Moore’s Law),” the blog said. Currently, Google has thousands of the chips in use.
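The “seven years” figure can be sanity-checked with a little arithmetic. The sketch below assumes that “an order of magnitude” means roughly 10x and that one Moore’s Law generation is a doubling about every two years; both are assumptions for illustration, not figures from Google, and the function name is hypothetical.

```python
import math


def moores_law_equivalent(improvement_factor, years_per_doubling=2.0):
    """Convert a performance-per-watt gain into Moore's Law terms.

    improvement_factor: assumed gain (10.0 for "an order of magnitude").
    years_per_doubling: assumed length of one generation, in years.
    Returns (number of doublings, equivalent years).
    """
    doublings = math.log2(improvement_factor)
    return doublings, doublings * years_per_doubling


generations, years = moores_law_equivalent(10.0)
print(f"{generations:.2f} doublings, about {years:.1f} years")
```

Under those assumptions the result is a little over three doublings, or between six and seven years, which is in line with the blog’s rounded “three generations” and “about seven years”.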

“Our goal is to lead the industry on machine learning and make that innovation available to our customers,” the blog post said. It added that a board with a TPU fits into a hard disk drive slot in Google’s data center racks.