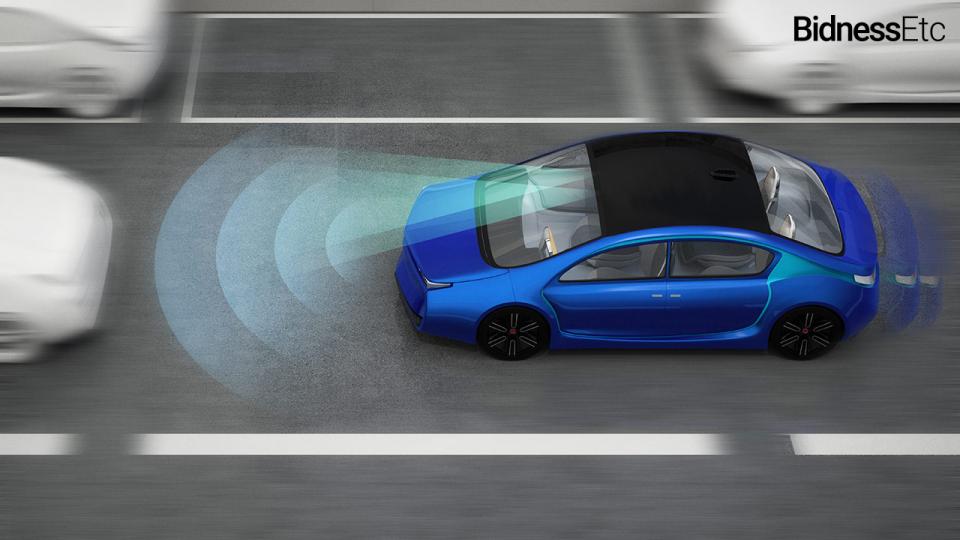

Dilemma over driverless cars as researchers put ‘sacrifice’ in spotlight

Essentially, people want the car to choose the greater good. However, research exploring multiple variants of the famous trolley dilemma – in which a speeding train is heading toward a large number of people, but stopping it would require that one person dies – finds that our utilitarianism tends to be very situational.


Driverless vehicles are widely expected to become far more prominent in transportation systems, not only as personal vehicles but also as taxis or even mass transit, in large part because they will be safer. Yet the question of how to program them is likely to be a no-win situation for this new breed of carmakers.

The paper, “The social dilemma of autonomous vehicles”, is being published today in the journal Science.

Autonomous vehicles (AVs) with intelligent computer software have the potential to eliminate up to 90% of traffic accidents, but the way they are programmed presents a huge ethical dilemma.

“Over the six years since we started the project, we’ve been involved in 11 minor accidents (light damage, no injuries) during those 1.7 million miles of autonomous and manual driving with our safety drivers behind the wheel, and not once was the self-driving car the cause of the accident”, Chris Urmson, director of Google’s self-driving car project, wrote in a May 2015 blog post.

The majority of respondents refused to ride in such cars. Although agreement was higher when the scenario involved saving ten lives rather than one, average agreement was still below the midpoint of the scale.

The programming decisions must take into account mixed public attitudes.

Self-driving cars have a lot of learning to do before they can replace the roughly 250 million vehicles on US roads today. But when the survey’s respondents were asked if they’d actually ride in a vehicle programmed in this way, they said no thanks.

“Is it acceptable for an AV to avoid a motorcycle by swerving into a wall, considering that the probability of survival is greater for the passenger of the AV than for the rider of the motorcycle?”

Another sign of this sentiment was that 50% of survey-takers said they’d be likely to buy a self-driving car that placed the highest value on passenger protection, while only 19% would purchase a model programmed to save the most lives.

But we’re not always such good utilitarians. When asked to rate the morality of a car programmed to crash and kill its own passenger to save 10 pedestrians, respondents’ favourable rating dropped by a full third when they had to consider the possibility that they’d be the ones riding in that vehicle.

Yet many of the same study participants balked at the idea of buying such a vehicle, preferring to ride in a driverless car that prioritizes their own safety above that of pedestrians.

Meanwhile, yet another survey conducted for the study found that people were particularly uncomfortable with the idea of the government mandating or legislating that autonomous vehicles make utilitarian “choices” in key instances – even though the prior surveys had shown that people generally approve of these utilitarian choices in the abstract.

“Though the public might overwhelmingly recognize the utilitarian model as the more morally appropriate to have on the roads, individual consumers will be – according to our data – significantly more drawn toward the self-protective ones”, said Azim Shariff, professor of psychology at the University of California, Irvine, and a co-author of the study.

Study author Dr Iyad Rahwan, from the Massachusetts Institute of Technology (MIT) Media Lab in Boston, US, said: “Most people want to live in a world where cars will minimise casualties, but everybody wants their own vehicle to protect them at all costs”. Consumers flatly stated that they’d never buy a car that could potentially kill them. Before we can put our values into machines, we have to figure out how to make our values clear and consistent.


The core moral divide here is actually a deep and persistent one, Greene says, and hardly exclusive to issues involving autonomous vehicles.