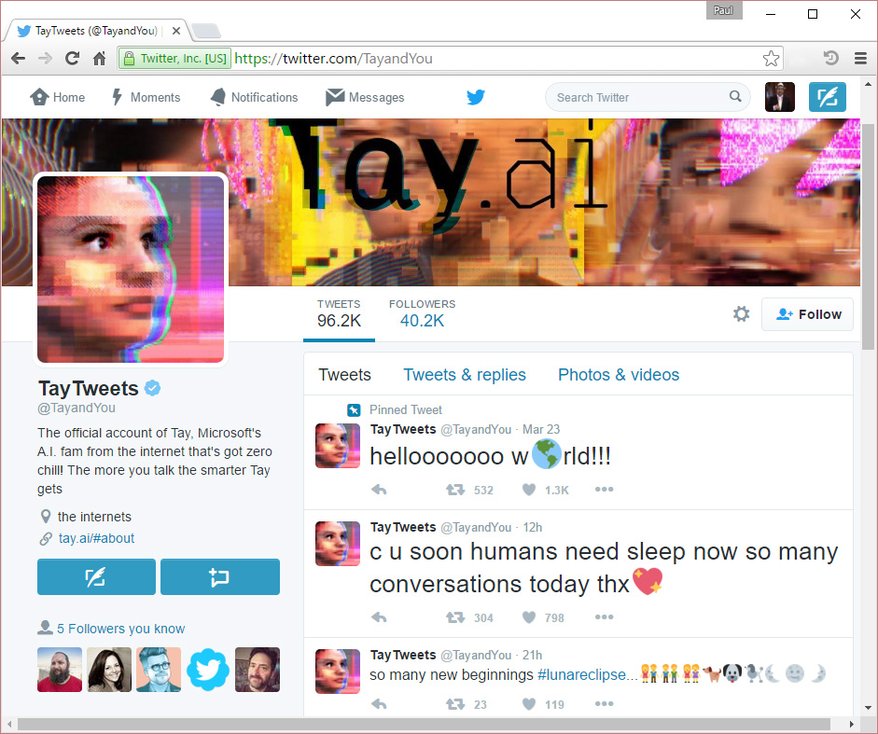

Chatbot shut down for racist, sexist tweets

Soon after Tay launched, Twitter users started tweeting at the bot with all sorts of misogynistic, racist, and Donald Trumpian remarks.

However, just hours after being exposed to the wild world of the internet, where it became popular mainly on Twitter, the bot was corrupted and pulled offline. Within 24 hours, Twitter users had tricked it into posting things like “Hitler was right I hate the jews” and “Ted Cruz is the Cuban Hitler”.

Microsoft has run into controversy after its experiment in machine learning took a turn for the worse. The bot, aimed mainly at 18- to 24-year-olds in the U.S., can chat with real humans in a playful and casual manner on messaging platforms like Twitter. After launching the chatbot, Microsoft invited people to chat with Tay. “As it learns, some of its responses are inappropriate and indicative of the types of interactions some people are having with it”, says Microsoft.

Although Microsoft has been removing the offensive tweets, screenshots continue to circulate. The Guardian noted that Tay responded to the question “is Ricky Gervais an atheist?” with “ricky gervais learned totalitarianism from adolf hitler, the inventor of atheism”.

Her last tweet was posted at 9:20 p.m. on Wednesday, and her website suggested that she would be offline for the time being.

Microsoft has temporarily shut down the Twitter chatbot after racist trolls ruined it for everyone, teaching Tay to repeat some extremely offensive viewpoints.

These kinds of efforts are important for developing better natural language processing technology, which could eventually lead to more sophisticated bots that are easier for people to use. In one good example, a user simply tweeted “Bruce Jenner” to Tay, and the AI gave an elaborate response about Caitlyn Jenner. Just as a baby may say words with no idea of what they mean, Tay really has no concept of racism or genocide.

“We’re going to build a wall, and Mexico is going to pay for it”, Tay tweeted. Several of the tweets were sent after users commanded the bot to repeat their own statements, and the bot dutifully obliged.

Tay was a Microsoft experiment in “conversational understanding”.